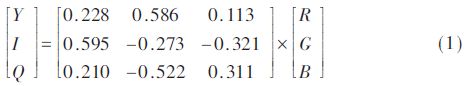

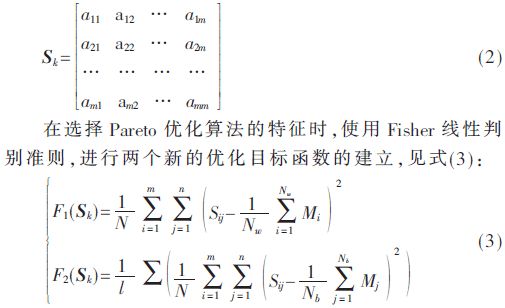

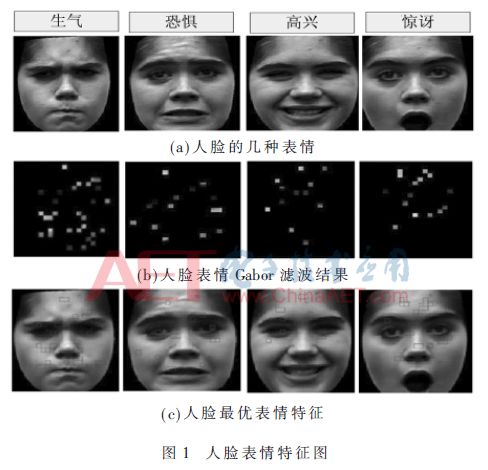

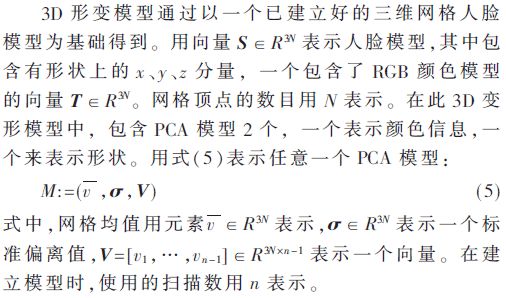

Summary: This paper studies an automatic expression recognition algorithm that combines skin color and face movement. The image is converted from the RGB color space to the YIQ color space, the image data in the first dimension of YIQ is extracted, and the background and the skin color are separated in the binary image. The Pareto optimization algorithm is used to select facial expression features. The algorithm has low computational complexity, is simple to construct, and runs fast. It accurately detects and tracks faces under conditions such as small-angle views of facial skin, changes in facial expression, face rotation, and face occlusion. Experiments show that the algorithm adapts well to small-angle rotation of the face, is not affected by the state of the eyes, adapts well to rich changes in facial expression and to different skin colors, and has a certain degree of stability.

0 Preface

In recent years, with advances in computer technology, real-time face identification systems have developed rapidly and are widely used in monitoring, search, and other related fields [1]. At this stage, however, face recognition technology still has defects that limit its practical application. Face detection is an important component of a face recognition system, so the detection stage plays a critical role. The many face detection algorithms fall into two categories. The first category is based on pixel features, which include contours, skin color, and so on. The second category is based on biometric features, which are microscopic features among the pixels of the image, such as the pixels' feature matrix and mean; the corresponding algorithms include neural networks, AdaBoost, and others [2].
Face color and motion recognition refers to the process of determining the color, size, and trajectory of a face in an image sequence [3]. Methods based on motion information and skin color information run fast, but skin color information places strict requirements on the background color distribution, produces many false detections, and gives a low recognition rate, while the tracking accuracy obtained from face motion information still needs to be improved [4]. The detection and recognition methods based on motion information and on skin color information are therefore combined to improve the accuracy of automatic expression recognition, so that it can meet the real-time monitoring requirements of practical applications [5]. This paper studies the recognition algorithm for automatic expression combined with skin color and face movement.

1 Extraction of face complexion

Skin color is one element of face information. On the surface, different people's skin colors appear to differ, but once brightness and other influencing factors are excluded, skin color shows a very high degree of clustering and its hue is basically the same [6]. In the RGB color space, brightness is not represented separately, so skin color does not cluster well and skin color regions are difficult to segment. In the YIQ color space, skin color is highly clustered, but only in the first dimension of the color space. Because only that one dimension has to be examined, the skin color segmentation algorithm has very low computational complexity, is very simple, and can meet the speed requirements of real-time monitoring systems. The image is therefore converted from the RGB color space to the YIQ color space.
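The conversion and thresholding described in this section can be sketched as follows. This is a minimal illustration rather than the paper's implementation: it uses the standard NTSC RGB-to-YIQ matrix, and the names `rgb_to_yiq` and `skin_mask`, the `channel` parameter, and the thresholds 20 and 90 are all assumptions made for the example. The text refers to the "first dimension" of YIQ; skin color work in YIQ commonly thresholds the I channel, so the channel index is left as a parameter.

```python
# Standard NTSC RGB -> YIQ matrix (rows produce Y, I, Q).
RGB_TO_YIQ = (
    (0.299, 0.587, 0.114),
    (0.596, -0.274, -0.322),
    (0.211, -0.523, 0.312),
)


def rgb_to_yiq(r, g, b):
    """Convert one RGB pixel (components in 0..255) to a (Y, I, Q) tuple."""
    return tuple(cr * r + cg * g + cb * b for cr, cg, cb in RGB_TO_YIQ)


def skin_mask(image, channel=1, low=20.0, high=90.0):
    """Return a binary mask with 1 where the chosen YIQ channel is in [low, high].

    image is a list of rows of (r, g, b) tuples. The channel index and both
    thresholds are illustrative assumptions, not values from the paper.
    """
    return [
        [1 if low <= rgb_to_yiq(*px)[channel] <= high else 0 for px in row]
        for row in image
    ]


# Tiny hypothetical image: left column skin-like tones, right column background.
image = [
    [(224, 172, 140), (30, 60, 200)],
    [(198, 134, 110), (40, 160, 60)],
]
mask = skin_mask(image)
print(mask)
```

Only one channel is computed and compared per pixel, which is why this kind of segmentation is cheap enough for real-time use.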
The conversion formula is the standard NTSC transform:

$$
\begin{bmatrix} Y \\ I \\ Q \end{bmatrix} =
\begin{bmatrix} 0.299 & 0.587 & 0.114 \\ 0.596 & -0.274 & -0.322 \\ 0.211 & -0.523 & 0.312 \end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix} \tag{1}
$$

The skin color extraction process has three steps: convert the image from the RGB color space to the YIQ color space using formula (1); extract the image data in the first dimension of YIQ; and set an appropriate threshold to separate the background from the skin color in the binary image.

2 Facial expression feature selection

2.1 Facial expression feature selection based on the GA algorithm

The genetic algorithm (GA) takes its main idea from Darwin's theory of biological evolution: it simulates the process of biological evolution in nature to search iteratively for the optimal value. The commonly used GA is the simple genetic algorithm (SGA). A genetic algorithm searches a target problem iteratively to find the optimal solution to that problem. When the target problem is initialized, the individuals of the population are obtained through genetic encoding. Each individual is an entity characterized by a chromosome. The chromosome carries the genetic material and is composed of many genes, so an individual is represented by a combination of genes, and that combination determines the individual's outward traits; hair color, for example, is determined by a combination of genes on the chromosome. Encoding the genes therefore means expressing the mapping between the genotype and its external representation.

2.2 Facial expression feature selection based on the improved Pareto optimization algorithm

The solutions are first optimized with the improved GA. The Pareto optimization algorithm is then applied to the multiple objectives $F(S_k)$ ($k = 1, 2, \ldots, N$) of each population to obtain the optimum $\max F(S_k)$.
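The idea of Pareto selection over several objectives can be illustrated with a plain non-dominated filter. This is a sketch under stated assumptions, not the improved algorithm of the paper: both objectives are assumed to be expressed so that larger is better, and the function names and the score values are hypothetical.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and better in at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(scores):
    """Return the indices of the score tuples that no other tuple dominates."""
    return [
        i
        for i, s in enumerate(scores)
        if not any(dominates(t, s) for j, t in enumerate(scores) if j != i)
    ]


# Hypothetical (F1, F2) scores for five candidate feature subsets.
scores = [(0.9, 0.2), (0.6, 0.7), (0.5, 0.6), (0.3, 0.9), (0.3, 0.4)]
front = pareto_front(scores)
print(front)
```

A candidate stays on the front when no other candidate is at least as good in every objective and strictly better in one, so the filter keeps trade-offs between the objectives rather than a single winner.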
The solution $S_k$ is obtained through equation (2). In that formula, $l$ denotes the number of expression classes; $M_i$ is a solution obtained by GA from the same class of expression, and $N_w$ is the number of such solutions; $M_j$ is a solution obtained by GA from a different class, and $N_b$ is the corresponding number of solutions. It can be seen from the objective function (3) that $F_2(S_k)$ corresponds to widening the gap between classes, and $F_1(S_k)$ corresponds to narrowing the gap within a class. Figure 1 shows the selection of facial expression features based on the GA and Pareto optimization algorithms.

2.3 Classification of facial expression features based on the random forest method

After the best features have been selected, they are classified into expressions, which include fear, anger, surprise, and happiness. A random forest classifier can effectively improve the accuracy of facial expression classification. A random forest is a combined classifier, essentially a collection of tree classifiers. Each base classifier is an unpruned decision tree constructed by the classification and regression tree (CART) algorithm, and the output is determined by simple majority voting. The Gini index is the split criterion for the classification and regression trees in the random forest algorithm. Its calculation is shown in equation (4), where mtry is the number of feature dimensions at each node and $P_i$ is the probability of class $i$ appearing in the sample set $S$.

3 Facial expression recognition method

3.1 Three-dimensional face deformation model

Figure 2 shows the face color PCA model. The standard deviation of the color coefficients is set to 2. The first color model mainly changes the global skin tone from black to white. The second model mainly changes the gender of the face.
The third model changes both gender and skin color.

3.2 Preprocessing based on binocular 3D face pose

The 67 alignment points of the face are aligned using the SDM (Supervised Descent Method) alignment algorithm. The positions of the nose, the eyes, and the corners of the face found by SDM can be used as positioning markers. SDM defines an objective function that the algorithm minimizes; solving this minimization with a linear method is the core of SDM, see equation (6). Using the angle obtained in this way, an affine transformation adjusts the pose of the face. The affine transformation is a coordinate transformation: once the image has been input, its coordinates are mapped to coordinates in the output image. Figure 3 shows the binocular face pose preprocessing.

4 Application of the support vector machine algorithm in target tracking

4.1 SVM kernel functions

Before the kernel function is evaluated, the transformation is determined in advance. The input is mapped into a high-dimensional feature space, and the optimal classification surface is then constructed in that space. At this stage the kernel function and its parameters are still selected by hand, which is largely arbitrary and makes stable performance difficult to achieve.

The kernel function is defined as follows. Let $X$ be the $d$-dimensional Euclidean space, let $x$ denote a point in this space, let the squared modulus of $x$ be $\|x\|^2 = x^T x$, and let $R$ denote the set of real numbers. Suppose that for a function $K: X \to R$ there exists a function $k: [0, \infty) \to R$ such that $K(x) = k(\|x\|^2)$. If $k$ is non-negative, non-increasing, and piecewise continuous, and satisfies $\int_0^\infty k(r)\,dr < \infty$, then $K(x)$ is called a kernel function.

4.2 Training process

Tracking a moving target with a support vector machine (SVM) usually involves an image classification process and a training process. During training, sample images with known classification results are input and pre-processed.
Features are extracted from the pre-processed images by the feature extraction method and used as the input data of the SVM learner. The kernel function and the parameter values are adjusted to optimize the dimensions and deviations in the support vector machine and the selection mode of the classifier, so that the input training samples are finally classified and the accuracy of the classifier is significantly improved.

4.3 Implementation of dynamic face tracking

For frame k, a hybrid skin color model is used. The input image is segmented by skin color, and the skin color threshold specified by the model yields the candidate face regions. An optimized Sobel operator edge detection method is applied to the candidate regions, and a SNoW classifier processes the result to detect face information and obtain the final face rectangle.

The face rectangle of frame k-1 is compared with the face rectangle of the current frame, and the motion state of the face rectangle is obtained by linear prediction. The image in the rectangle is enhanced using the FWT algorithm and the Haar wavelet transform, and the face information is verified by the SVM classifier. If face information is detected, the region is marked as a face region. If no face information is detected, the target is considered lost; as long as the allowable loss time has not been exceeded, tracking and detection continue on frame k+1. Once the allowable loss time is exceeded, this tracking run ends and a new round of face recognition, tracking, and detection starts.
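The control flow of this tracking step (verify, predict linearly while the target is lost, restart after the allowable loss time) can be sketched as below. It is a simplified illustration under assumptions: detection and SVM verification are replaced by a per-frame list of rectangles and a hypothetical `verify` callable, the allowable loss time is counted in frames as `max_lost`, and predicted rectangles are fed back into the history. None of these names or choices come from the paper.

```python
def predict_rect(prev, curr):
    """Linearly extrapolate the next (x, y, w, h) rectangle from two consecutive ones."""
    return tuple(2 * c - p for p, c in zip(prev, curr))


def track(detections, verify, max_lost=3):
    """Follow one face through a sequence of per-frame detections.

    detections: one rectangle (x, y, w, h) or None per frame, standing in for
                the skin color / edge / classifier stages.
    verify:     callable(rect) -> bool, standing in for SVM verification.
    Returns a list of (frame_index, rect, status) tuples.
    """
    history, lost, out = [], 0, []
    for k, rect in enumerate(detections):
        if rect is not None and verify(rect):
            history = (history + [rect])[-2:]  # keep the two latest rectangles
            lost = 0
            out.append((k, rect, "face"))
            continue
        lost += 1
        if lost > max_lost:
            # Allowable loss time exceeded: end this run and start over.
            out.append((k, None, "restart"))
            history, lost = [], 0
        elif len(history) == 2:
            guess = predict_rect(*history)
            history = [history[1], guess]
            out.append((k, guess, "predicted"))
        else:
            # Not enough history to extrapolate; report the last known rectangle.
            out.append((k, history[-1] if history else None, "lost"))
    return out


# Hypothetical detections: the face disappears in frame 2 and again from frame 4 on.
detections = [(10, 10, 40, 40), (14, 12, 40, 40), None,
              (22, 16, 40, 40), None, None, None]
results = track(detections, verify=lambda rect: True, max_lost=2)
for row in results:
    print(row)
```

Counting lost frames rather than elapsed time keeps the sketch deterministic; a real system would more likely compare timestamps against the allowable loss time.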
5 Analysis of experimental results

Following the algorithm flow given above, face recognition and tracking achieve accurate positioning and detection, and automatic expression positioning combined with face color and face movement is realized. A QC288 camera is used as the image acquisition tool. All images are true color images with a resolution of 640×480. Figure 4 shows some of the face tracking results; the content marked by the circle is the face target in the image. With the detection and tracking algorithm for automatic facial expression combined with facial skin color and facial movement described in this paper, faces are accurately detected and tracked under conditions such as small-angle views of facial skin, changes in facial expression, face rotation, and occluding objects on the face.

6 Conclusion

This paper studies the recognition algorithm for automatic expression combined with skin color and face movement. The image is converted from the RGB color space to the YIQ color space, the image data in the first dimension of YIQ is extracted, and the background and the skin color are separated in the binary image. The Pareto optimization algorithm is used to select facial expression features. The algorithm has low computational complexity, is simple to construct, and runs fast. It accurately detects and tracks faces under conditions such as small-angle views of facial skin, changes in facial expression, face rotation, and face occlusion. Experiments show that the algorithm adapts to small-angle rotation of the face, is not affected by the state of the eyes, adapts well to rich changes in facial expression and to different skin colors, and has a certain degree of stability.


Then the kernel function is K(x).
