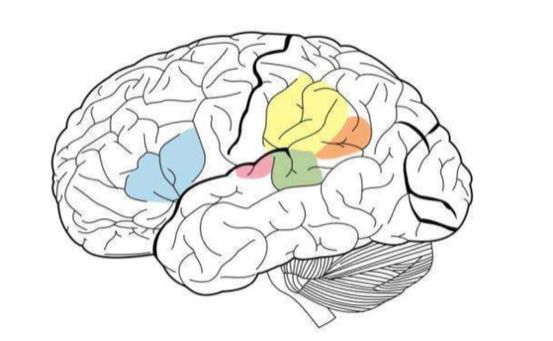

Researchers at the Massachusetts Institute of Technology (MIT) have used deep neural networks, a class of machine learning algorithm, to create the first model that can replicate human performance on auditory tasks such as identifying a musical genre. The model consists of many information-processing units that are trained on large amounts of data to perform a specific task. The researchers used the model to shed light on how the human brain may perform the same tasks.

"For the first time, these models give us machine systems that can perform sensory tasks that matter to humans, and that do so at human levels," said Josh McDermott, the Frederick A. and Carole J. Middleton Assistant Professor of Neuroscience in MIT's Department of Brain and Cognitive Sciences and the senior author of the study. "Historically, this type of sensory processing has been difficult to understand, in part because we have not had a very clear theoretical foundation or a good way to model what might be going on."

The study, published in the April 19th issue of Neuron, also provides evidence that the human auditory cortex is arranged in a hierarchical organization, much like the visual cortex. In this type of arrangement, sensory information passes through successive stages of processing: basic information is processed earlier, and higher-level features, such as word meaning, are extracted later.

Alexander Kell, a graduate student at MIT, and Daniel Yamins, an assistant professor at Stanford University, are the lead authors. The other authors are former MIT student Erica Shook and former MIT postdoctoral fellow Sam Norman-Haignere.

Brain Modeling: Models Learn to Perform Tasks as Accurately as Humans

When neural networks first appeared in the 1980s, neuroscientists hoped that such systems could be used to model the human brain.
However, computers of that era were not powerful enough to build models large enough to perform real-world tasks such as object recognition or speech recognition.

Over the past five years, advances in computing power and neural network technology have made it possible to use neural networks for these difficult real-world tasks, and they have become the standard approach in many engineering applications. At the same time, some neuroscientists have revisited the question of whether these systems can be used to model the human brain.

"This is an exciting opportunity for neuroscience, because we can create systems that perform some of the tasks that people perform, then probe these models and compare them with the brain," Kell said.

The MIT researchers trained their neural network to perform two auditory tasks, one involving speech and the other involving music. For the speech task, the researchers gave the model thousands of two-second recordings, and the task was to identify the words in the audio. For the music task, the model was asked to identify the genre of a two-second music clip. Each clip also included background noise, making the task more realistic and more difficult.

After training on many thousands of examples, the model learned to perform the tasks as accurately as humans.

"The idea is that over time the model gets better and better at the task," Kell said. "The hope is that it is learning something general, so that if you present a new sound that the model has never heard before, it will do well. Experiments have shown that this is the case."

The model also tended to make mistakes on the same clips on which humans made the most mistakes.

The processing units that make up a neural network can be combined in a variety of ways, forming different architectures that affect the model's performance. The MIT team found that the best model for these two tasks was one that divided the processing into two stages.
The first stage is shared between the tasks, but after that the model splits into two branches for further analysis: one for the speech task and the other for the music task.

Evidence for Hierarchy: Primary Auditory Cortex Differs from the Rest

The researchers then used their model to explore a long-standing question about the structure of the auditory cortex: whether it is organized hierarchically. In a hierarchical system, a series of brain regions performs different types of computation on sensory information as it flows through the system. There is evidence that the visual cortex has this type of organization. Earlier regions, known as the primary visual cortex, respond to simple features such as color or orientation. Later regions perform more complex tasks such as object recognition.

However, it has been difficult to test whether this type of organization also exists in the auditory cortex, in part because there have been no good models that replicate human auditory behavior.

"We thought that if we could build a model that performs some of the same tasks that people do, we might then be able to compare different stages of the model with different parts of the brain and get some evidence for whether those parts of the brain are hierarchically organized," McDermott said.

The researchers found that in their model, basic features of sound, such as frequency, are easier to extract in the early stages. As information moves further along the network, these basic features become harder to extract, while higher-level information, such as the meaning of words, becomes easier to extract.

To test whether the model's stages replicate how the human auditory cortex processes sound, the researchers used functional magnetic resonance imaging (fMRI) to measure activity in different regions of the auditory cortex as the brain processed real-world sounds. They then compared the brain's responses with the model's responses to the same sounds.
They found that the middle stages of the model corresponded best to activity in the primary auditory cortex, while later stages corresponded best to activity outside the primary cortex. The researchers say this provides evidence that the auditory cortex is arranged hierarchically, similar to the visual cortex.

"We see very clearly a distinction between the primary auditory cortex and everything else," McDermott said.

The authors now plan to develop models that can perform other types of auditory tasks, such as determining where a particular sound came from, to explore whether these tasks can be carried out by the pathways identified in this model or whether they require separate pathways, which could then be investigated in the brain.
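
The two-stage architecture described earlier (a first stage shared by both tasks, followed by one branch for speech and one for music) can be illustrated with a minimal sketch. This is not the researchers' network: it assumes tiny fully connected layers with random, untrained weights, and the class name `BranchedModel` and all layer sizes are hypothetical, chosen only to show how one shared computation can feed two task-specific outputs.

```python
import math
import random


def linear(inputs, weights, biases):
    """Weighted sum of the inputs for each output unit."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Keep positive activations, zero out the rest."""
    return [max(0.0, v) for v in values]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_layer(rng, n_in, n_out):
    """Random (untrained) parameters; a real model would learn these."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


class BranchedModel:
    """Hypothetical model: one shared stage, then two task branches."""

    def __init__(self, n_features, n_shared, n_words, n_genres, seed=0):
        rng = random.Random(seed)
        self.shared = random_layer(rng, n_features, n_shared)
        self.speech_branch = random_layer(rng, n_shared, n_words)
        self.music_branch = random_layer(rng, n_shared, n_genres)

    def forward(self, sound_features):
        # Stage 1: computed once and reused by both tasks.
        shared = relu(linear(sound_features, *self.shared))
        # Stage 2: each branch reads the same shared representation.
        word_probs = softmax(linear(shared, *self.speech_branch))
        genre_probs = softmax(linear(shared, *self.music_branch))
        return word_probs, genre_probs


# Illustrative sizes only: 8 input features, 5 candidate words, 3 genres.
model = BranchedModel(n_features=8, n_shared=6, n_words=5, n_genres=3)
clip = [0.1 * i for i in range(8)]  # stand-in for features of a sound clip
word_probs, genre_probs = model.forward(clip)
print(len(word_probs), len(genre_probs))
```

Sharing the first stage means that low-level sound features are computed once for both tasks, while each branch can specialize in what its own task needs.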