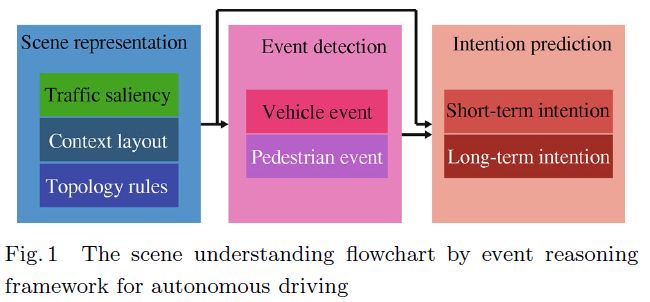

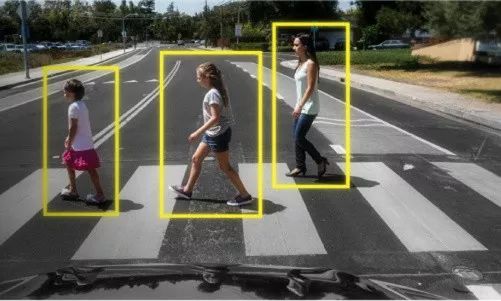

"In the field of transportation, automation is one of the most popular research topics. Within 10 years, fully autonomous cars could be produced." (Nature, 2015)

Can a fully automated car really be built within 10 years? Frankly, given the current state of research and its open challenges, reaching this goal will take longer.

Development history of autonomous driving

Automated systems are developed to help people handle everyday tasks. Autonomous driving is closely tied to people's daily travel, so it has become one of the most closely watched of these technologies. It frees people's hands from the steering wheel and gives them more time for other tasks. In addition, the sensors on a self-driving vehicle can quickly perceive the surrounding environment, which helps ensure safe driving and reduce traffic accidents.

At present, two forces are driving autonomous driving research. The first is the research programs and challenges launched by governments, research institutes, and manufacturers. The second is the large number of public benchmarks.

1) Research Projects and Challenges

In 1986, Europe launched the "Intelligent Transportation Systems Project", known as PROMETHEUS, in which more than 13 automobile manufacturers and research institutes from 19 countries took part. In the United States, Charles Thorpe and colleagues at Carnegie Mellon University launched the country's first automated driving project. By 1995 this project had made great progress: its car drove automatically from Pittsburgh, Pennsylvania, to San Diego, California. In the same year, backed by many research efforts, the U.S. government formed the National Automated Highway System Consortium (NAHSC). This series of projects advanced long-term research on automated systems for highway scenarios. However, none of these studies addressed urban scenarios.
Urban scenes, however, are closely tied to people's daily lives. The DARPA Grand Challenge, organized by the U.S. Defense Advanced Research Projects Agency (DARPA), greatly accelerated autonomous vehicle research. The first and second editions were held in 2004 and 2005, respectively. On November 3, 2007, the third edition, the "Urban Challenge", began at the former George Air Force Base in Victorville, California, to test autonomous vehicles in the Mojave Desert. The rules required vehicles to obey traffic regulations while interacting with other vehicles and obstacles and merging fully into traffic. Four teams completed the route within six hours.

In 2009, the National Natural Science Foundation of China launched the Intelligent Vehicle Future Challenge of China (iVFC). As of November 2017, nine editions had been held. Also in 2009, Google launched its autonomous driving project. By March 2018, the project had completed more than 5 million miles of automated driving tests. In 2016, the project was spun off as Waymo, an independent company dedicated to autonomous driving technology. In October 2016, Tesla released Autopilot 2.0, equipped with multiple cameras, 12 ultrasonic sensors, and a forward-facing radar. Tesla stated that all vehicles equipped with Autopilot 2.0 would be capable of autonomous driving. More and more car manufacturers, such as Audi, BMW, and Mercedes, have also begun developing their own self-driving cars.

2) Benchmarks

In 2012, Andreas Geiger and colleagues proposed the KITTI vision benchmark. It contains six different city scenes and 156 video clips, each 2 to 8 minutes long. The data was collected by a car equipped with color and grayscale cameras, a Velodyne 3D laser scanner, and a high-precision GPS/IMU inertial navigation system.
Cambridge University also released the CamVid dataset. It contains video sequences of four city scenes and provides an evaluation benchmark. Another popular benchmark is the Cityscapes dataset, published in 2016. It covers scenes from 50 cities and contains 5,000 finely annotated images and 20,000 coarsely annotated images. Cityscapes has become one of the most challenging datasets for semantic segmentation.

Annotation is time-consuming and laborious. To reduce this burden, Adrien Gaidon et al. used computer graphics to build a large-scale virtual dataset similar to KITTI. The advantage of a virtual dataset is that it can generate data for any desired task, even a very rare one. However, real scenarios are complex and diverse, so such benchmarks have a short useful life. To address this problem, Will Maddern and colleagues repeatedly drove a route through central Oxford over one year. They covered more than 1,000 kilometers and collected more than 20 terabytes of image, LiDAR, and GPS data. This dataset captures much more variation in urban scenes, lighting, and weather, but it lacks adequate annotation. Beyond configuring various sensor systems, some researchers also focus on full-view (panoramic) data collection. They mount multiple cameras on the test vehicle to collect data from different viewpoints, as in the LISA-Trajectory and PKU-POSS datasets.

The difficulties in the development of autonomous driving technology

At present, the development of autonomous driving technology faces the following main difficulties:

1) Environment perception, such as the detection, tracking, and segmentation of traffic participants, still makes unavoidable mistakes in real environments.
2) The driving environment is highly complex and unpredictable, changes in real time, and is full of uncertainty.
3) Research on deep understanding of traffic scenes is far from sufficient. Examples include understanding the geometric and topological structure of the scene and the spatio-temporal changes of participants (pedestrians, vehicles, etc.). The ultimate goal of such research is to infer the semantic evolution of the scene, which provides a reference for action planning and autonomous driving control. This research is very difficult, however, because these factors are hidden in the driving environment and cannot be observed directly.
4) The deployment of self-driving vehicles meets social resistance and raises ethical questions.

Structure of this article

This article focuses on deep understanding of traffic scenes for self-driving vehicles. It aims to explore how traffic scenarios evolve from an event reasoning perspective. Because they support a traceable inference strategy, events can reflect the dynamic evolution of the scene. To present the study clearly and logically, the article organizes event reasoning into three stages: representation, detection, and prediction.

In the representation stage, the authors examine the saliency, contextual layout, and topological rules of driving scenes in detail, aiming to obtain high-quality cues for the following two stages. In the detection stage, the authors review event detection from the perspectives of different participants, such as pedestrians and vehicles. In the prediction stage, the article focuses on intention prediction for autonomous vehicles and divides it into long-term and short-term intention prediction.

Beyond these stages, end-to-end approaches to understanding driving scenes have emerged in recent years, such as FCN (fully convolutional networks) and FCN-LSTM. Section 5 of the article focuses on this type of approach.
The article also discusses several open issues and challenges and proposes possible solutions. The full text is organized as follows:

- Section 1 is the introduction.
- Section 2 discusses scene representation, paving the way for the event inference that follows.
- Section 3 reviews pedestrian and vehicle event detection.
- Section 4 outlines intention prediction.
- Section 5 introduces end-to-end frameworks, based on deep learning, for direct reasoning.
- Section 6 covers evaluation metrics and the associated datasets for event reasoning.
- Section 7 concludes the article.

Full text information

A Survey of Scene Understanding by Event Reasoning in Autonomous Driving
Jian-Ru Xue, Jian-Wu Fang, Pu Zhang

Abstract: Realizing autonomy has been a hot research topic for automatic vehicles in recent years. For a long time, most of the efforts toward this goal have concentrated on understanding the scenes surrounding the ego-vehicle (the autonomous vehicle itself). These scenes can be interpreted by completing low-level vision tasks, such as detection, tracking, and segmentation of the surrounding traffic participants, e.g., pedestrians and vehicles. However, for an autonomous vehicle, low-level vision tasks alone are insufficient for comprehensive scene understanding. What about the past and the future of the scene participants? This paper investigates the interpretation of traffic scenes in autonomous driving from an event reasoning view. To reach this goal, we study the most relevant literature and the state of the art in scene representation, event detection, and intention prediction in autonomous driving. In addition, we discuss the open challenges and problems in this field and endeavor to provide possible solutions.
